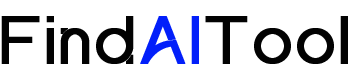

Data Normalizer is an AI-driven tool designed to streamline data standardization in Excel and CSV files and in Python, R, and SQL workflows. It tackles the common problems of inconsistent values, spelling errors, and mismatched abbreviations, offering a quick and efficient way to normalize data.

At its core, Data Normalizer uses fuzzy matching, fuzzy search, and Levenshtein distance to process and standardize data. Levenshtein distance counts the single-character insertions, deletions, and substitutions needed to turn one string into another, so a misspelled entry can be matched to the closest known value. This approach allows even complex datasets with multiple variations and errors to be normalized, saving users significant time and effort in data preparation.
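To make the idea concrete, here is a minimal Python sketch of Levenshtein-based normalization. It is not Data Normalizer's actual implementation, which is not described here. The function names `levenshtein` and `normalize`, the `max_distance` threshold, and the sample state names are all assumptions chosen for illustration.

```python
def levenshtein(a: str, b: str) -> int:
    """Edit distance: minimum insertions, deletions, and substitutions."""
    if len(a) < len(b):
        a, b = b, a  # keep the shorter string in the inner loop
    previous = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        current = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            current.append(min(
                previous[j] + 1,         # deletion
                current[j - 1] + 1,      # insertion
                previous[j - 1] + cost,  # substitution
            ))
        previous = current
    return previous[-1]


def normalize(value: str, canonical: list[str], max_distance: int = 2) -> str:
    """Map a value to its closest canonical form (hypothetical helper).

    The input is returned unchanged when no canonical entry is within
    max_distance edits, so unrelated values are not forced into a match.
    """
    if not canonical:
        return value
    cleaned = value.strip().lower()
    best = min(canonical, key=lambda c: levenshtein(cleaned, c.lower()))
    if levenshtein(cleaned, best.lower()) <= max_distance:
        return best
    return value


# Illustrative data only.
states = ["California", "Colorado", "Florida"]
print(normalize("Calfornia", states))   # misspelling, one edit away
print(normalize("Texas", states))       # no close match, left unchanged
```

A fixed edit-distance threshold like this handles typos but not abbreviations (for example, "CA" is far from "California" in edit distance), so abbreviation handling would need a separate lookup or a different similarity measure.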

The software is particularly beneficial for data analysts, researchers, and business professionals who regularly work with large datasets from diverse sources. Its user-friendly interface makes it accessible to both technical and non-technical users, allowing for seamless integration into existing workflows.

By using Data Normalizer, organizations can improve their data quality, which supports more accurate analyses, better decision-making, and greater operational efficiency. Because the tool works with several file formats and programming languages, it can be applied across different industries and use cases.

Data Normalizer not only simplifies the data cleaning process but also enhances data consistency across an organization. This uniformity is crucial for effective data integration, reporting, and analytics. With features like automatic error correction and standardization of abbreviations, the software significantly reduces the risk of data-related errors and misinterpretations, ultimately leading to more reliable insights and improved data-driven strategies.