The NSFW Image Detection API identifies and filters explicit or sensitive content in images. It analyzes each uploaded image with AI algorithms and returns a numerical score from 0.0 to 1.0 indicating the likelihood that the image contains NSFW content. Processing is fast, and the API detects inappropriate material accurately across various image formats.

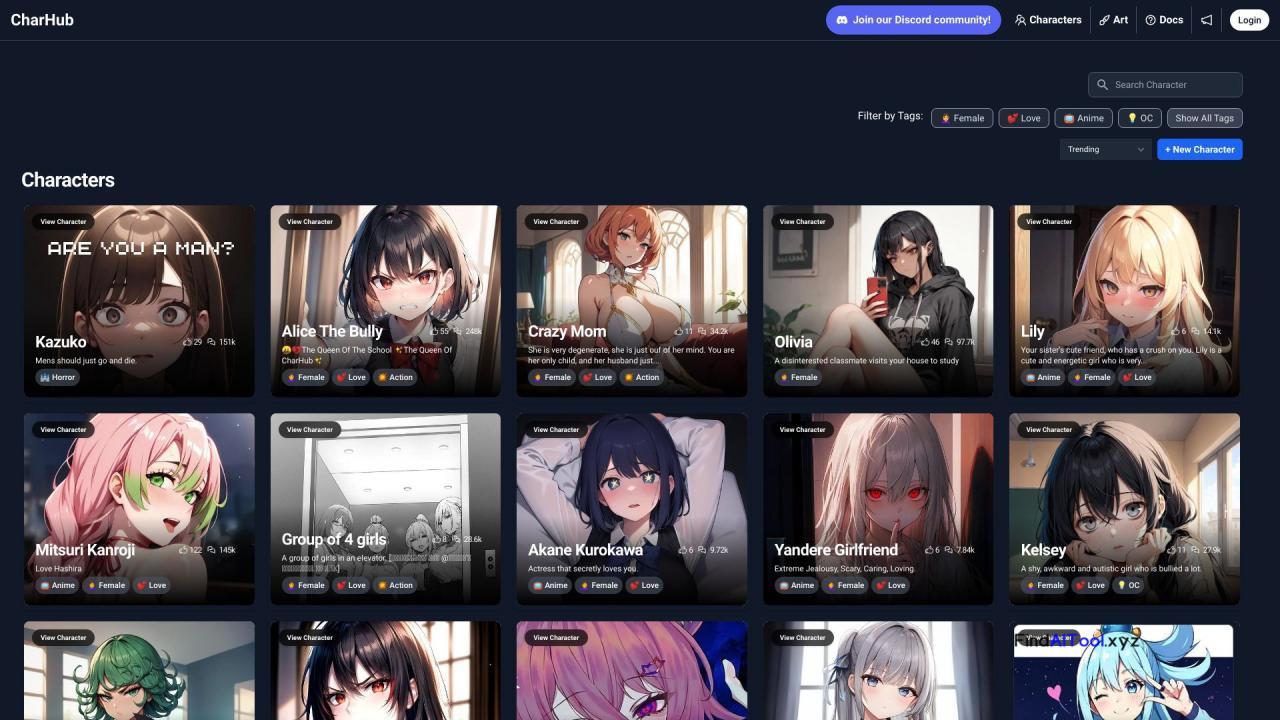

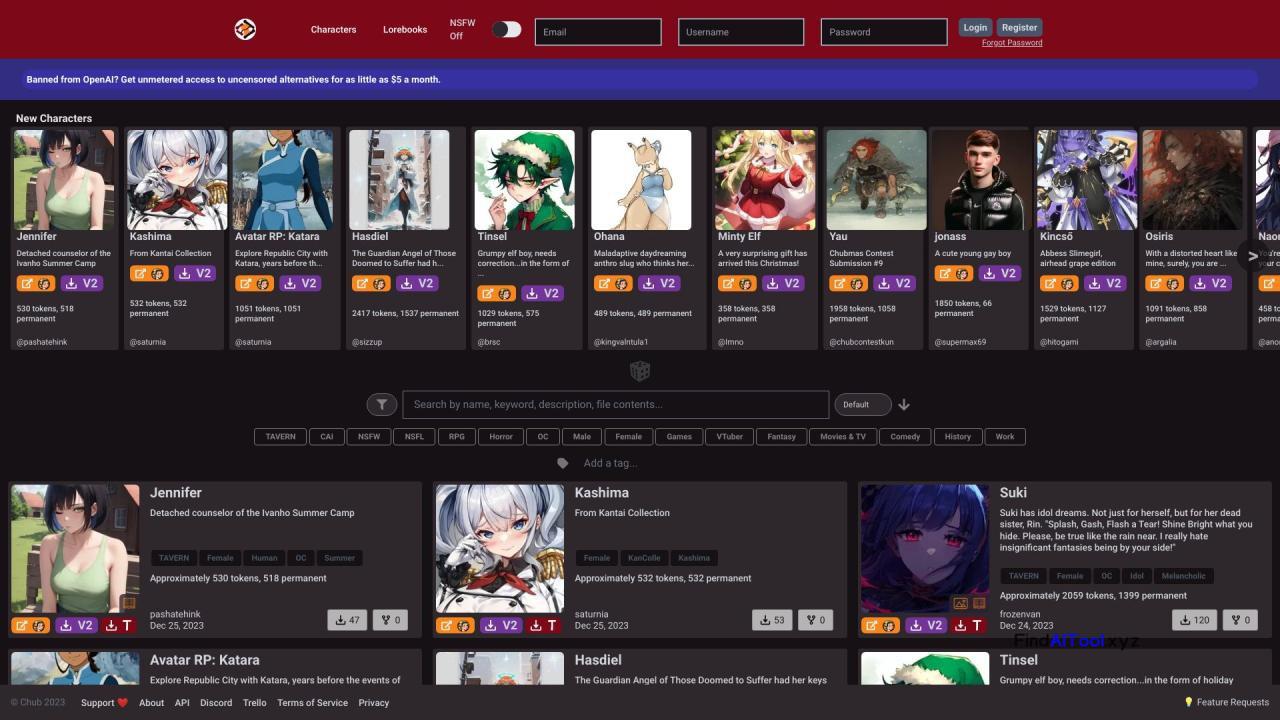

The API is particularly useful for content moderation on social media platforms, user safety in online communities, and explicit-content filtering in image search engines. Its core features are precise NSFW content detection, a graded score rather than a yes/no verdict, and rapid image processing, which together help maintain safe and appropriate online environments.
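Because the API reports a likelihood between 0.0 and 1.0 rather than a binary label, a moderation pipeline typically has to decide what to do at each score level. The following is a minimal sketch of one way to do that, not part of the API itself: the `moderate` function, the `Action` names, and the threshold values 0.5 and 0.85 are all hypothetical and chosen only for illustration. The request to the API is omitted because its endpoint and response format are not described here; the sketch assumes the score has already been extracted as a float.

```python
from enum import Enum


class Action(Enum):
    """Hypothetical moderation outcomes; not defined by the API."""
    ALLOW = "allow"
    REVIEW = "review"
    BLOCK = "block"


def moderate(score: float, review_at: float = 0.5, block_at: float = 0.85) -> Action:
    """Map an NSFW likelihood score in [0.0, 1.0] to a moderation action.

    The default thresholds are illustrative assumptions and should be
    tuned against a platform's own content and policies.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError(f"score must be within [0.0, 1.0], got {score!r}")
    if not 0.0 <= review_at <= block_at <= 1.0:
        raise ValueError("thresholds must satisfy 0.0 <= review_at <= block_at <= 1.0")

    if score >= block_at:
        return Action.BLOCK   # high likelihood: reject automatically
    if score >= review_at:
        return Action.REVIEW  # uncertain: send to a human moderator
    return Action.ALLOW       # low likelihood: publish


if __name__ == "__main__":
    for s in (0.03, 0.62, 0.97):
        print(s, moderate(s).value)
```

Using two thresholds instead of one keeps borderline images in front of a human moderator, while clear-cut cases at either end of the range are handled automatically.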

The NSFW Image Detection API is ideal for platform administrators, content moderators, and developers working on user-generated content sites. It’s also beneficial for companies prioritizing brand safety and those creating family-friendly online spaces. By implementing this API, users can significantly reduce the risk of inappropriate content exposure, streamline moderation processes, and uphold community standards more effectively.

Automating NSFW content detection saves organizations time and resources while improving user experience and safety. Content can be filtered proactively, before it reaches users, and manual moderation effort goes down, which contributes to a safer and more trustworthy online environment.